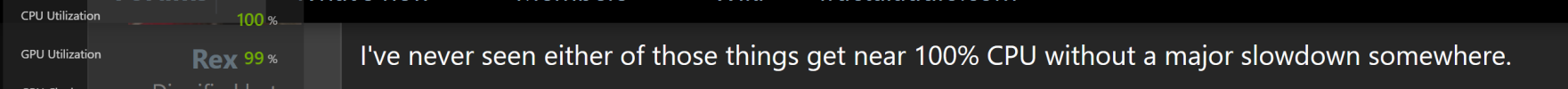

Btw, why is "80-82%" the "safe" range for CPU consumption? I'm sure this has been explained/discussed elsewhere. My guess is that Fractal wanted to give the user maximum flexibility: if you were, for example, in the studio and wanted to run a preset that was dangerously high in CPU consumption, you would have that option. No hard limit. Definitely don't want things crapping out during a performance though. Conceptually, the CPU meter seems to work more like "headroom": you want to leave some in reserve. It does sometimes trigger the OCD part of my brain, which would prefer that as long as the meter didn't hit 100%, all operations on the FM9 would be good to go.

But why 80-82%? Which operations within a preset are most likely to cause a swing of an additional 20% in CPU usage, enough to hit 100% and make the unit unstable? Why does it appear that the FM9 can become unstable before the meter reaches 100%? Are there CPU-consuming operations that are not reflected in the CPU meter?