The reason is that it is biased by the creator. Even if you had an AI developed by another AI, the bias would still be present since it can ultimately be traced back to a human creator. For something entertaining, look up Ben Shapiro demonstrating how to force AI into a logical corner by using its own bias against it.

> I'm sure this chat bot isn't an AI apologist (paragraph 3), but it glosses over potential risks and problems -- like most humans.

The handful of examples I've seen of supposedly stellar answers from AI tend to stay on or near the surface of an issue. My take is that some folks fully embrace it and give it too much credit. But that wasn't what I was referring to above. I was referring to questions of morals and ethics. If you haven't looked up Ben Shapiro yet, he makes an AI system contradict itself on the issue of sanctity of human life by exploiting the bias.

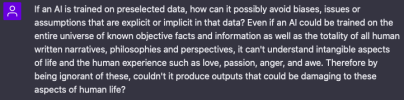


> If you haven't looked up Ben Shapiro yet, he makes an AI system contradict itself on the issue of sanctity of human life by exploiting the bias.

Will check it out.

> I was referring to questions of morals and ethics.

I guess my read on this is that AI will be biased in all cases, including morals and ethics.

> @fcs101 If it was this one, I agree that it was generally forming (parroting) answers based on a kind of cultural majority view about certain ideas, e.g. female vs. male or baby vs. viable human being. Yet if the AI was based on a more libertarian or particular religious corpus of texts, it would parrot those ideas. It can only output something related to its training data.

I'm not arguing for a libertarian or any other persuasion. I'm just pointing out that any AI will carry bias as it was ultimately designed by a human. That bias would then lead to tyranny if allowed to play out (allowing AI to determine policies, etc.). I think we're pretty close to being on the same page.

I think taking a string of Q&A and finding "contradictions" wouldn't be hard with any chatbot or any human, and Shapiro's strategy is often to trap people by their statements and self-contradictions. But we all have apparent and actual self-contradictions, especially in differing contexts.

What it does show is that with these chatbots there is no underlying "ontological" model of the world, because they don't know the world, only our talk about it. And facts (or valid concepts) to one person or group may be disputed by another.

When people do have self-contradictions in their personal philosophy and value system, it's usually either driven by selfishness/self-interest or because they are guided by emotions and haven't logically thought it through.

The problem is that the AI is governed by someone, and that someone can be pre-biased, bribed or compelled to tweak what the AI does. What it says now is not necessarily going to be consistent forever.

> However at one point the researchers realized that the network was really looking for snow in the pictures and then said "wolf".

Yeah. All NNs can do is look for features, associations, correlations, etc. in the training data based on training goals, and for NNs that's typically accuracy of classification.
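To make the snow/wolf point concrete, here is a toy sketch in Python. It is not a neural network and not the researchers' setup: it is a made-up single-feature classifier on invented data, with hypothetical feature names (`snow`, `long_snout`). It only illustrates how optimizing for training accuracy can latch onto a background correlation.

```python
# Toy training set (invented for illustration): each "photo" is reduced to two
# hand-made binary features. Every wolf photo here happens to have snow in it.
TRAIN = [
    ({"snow": 1, "long_snout": 1}, "wolf"),
    ({"snow": 1, "long_snout": 0}, "wolf"),
    ({"snow": 1, "long_snout": 1}, "wolf"),
    ({"snow": 0, "long_snout": 1}, "husky"),
    ({"snow": 0, "long_snout": 0}, "husky"),
    ({"snow": 0, "long_snout": 1}, "husky"),
]


def accuracy(feature, data):
    """Training accuracy of the rule 'feature present means wolf'."""
    hits = sum((x[feature] == 1) == (label == "wolf") for x, label in data)
    return hits / len(data)


def train(data):
    """Pick the single feature that scores best on the training data."""
    features = data[0][0].keys()
    return max(features, key=lambda f: accuracy(f, data))


def predict(feature, photo):
    """Apply the learned one-feature rule to a new photo."""
    return "wolf" if photo[feature] == 1 else "husky"


learned = train(TRAIN)
print("learned feature:", learned)

# A husky photographed in snow, and a wolf photographed on grass.
print(predict(learned, {"snow": 1, "long_snout": 1}))
print(predict(learned, {"snow": 0, "long_snout": 1}))
```

In this toy data the "model" ends up keying on snow because that alone gives perfect training accuracy, so it mislabels both new photos. Nothing in the training goal tells it that snow isn't what makes a wolf a wolf.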

I do think AI can be a very useful tool; you just have to understand it is a tool. I've just seen some other posts (not in this thread) where people were gaga over answers received, which I didn't find too enlightening.

> For example, the AI was recently censored from providing climate-change crisis counter-arguments

Interesting. I tried to find this but couldn't find it. Do you have a reference?

Where's the ranch dip?


> @fcs101 I think taking a string of Q&A and finding "contradictions" wouldn't be hard with any chatbot or any human, and Shapiro's strategy is often to trap people by their statements and self-contradictions. But we all have apparent and actual self-contradictions, especially in differing contexts.

Socrates/Plato was pointing out logical contradictions in humans long before AI was a thing. I'm thinking of the allegory of the cave in Plato's Republic. Perhaps the underlying question is how AI differs from humans in perceiving what is "truth" or "fact". We humans become very invested in the belief that our viewpoint and/or opinions are an accurate reflection of what is real. How to approach this conundrum continues to be an issue that humans grapple with. What is the source of Truth? God, Spirit, biological determinism? Can a machine "comprehend" the mysteries of existence? It seems like the danger is giving AI the power to manipulate and control without addressing these deeper issues.

> Interesting. I tried to find this but couldn't find it. Do you have a reference?

There is also a huge difference between climate science using AI to detect trends and make predictions versus AI chatbots arguing for or against climate change.

The former is based on data (yes, which can be biased or manipulated, but isn't necessarily), and the latter is more about constructing a logical, narrative, or emotionally persuasive argument based on what humans have already written or said for or against climate change.

> Socrates/Plato was pointing out logical contradictions in humans long before AI was a thing. Thinking of the analogy of the cave in Plato's Republic. Perhaps the underlying question is how AI differs from humans in perceiving what is "truth" or "fact". Us humans become very invested in the belief that our viewpoint and/or opinions are an accurate reflection of what is real. How to approach this conundrum continues to be an issue that humans grapple with. What is the source of Truth? God, Spirit, biological determinism? Can a machine "comprehend" the mysteries of existence?

Great points.

> Great points.

Do you really think Truth is a human construct?

To riff on that, truth and fact are human conceptions with certain practical, social or moral utility or impact. A purely language-based AI could talk about them like we do, but it couldn't determine "true facts" about the world on its own (e.g. anything outside its training data).

> Do you really think Truth is a human construct?

I don't think individual particular truths (or facts) are constructs as such (see my 3rd paragraph), but "truth" is a general construct, concept or label for lots of different ideas ranging from scientific to mathematical to religious. Different domains have different particular truths which might not apply universally or to other domains. The term "love" is another linguistic construct that can indicate a huge range of feelings, ideas, acts and so on, which can be largely personally or culturally determined.